Envelopment and realism: Embracing immersive audio in sports broadcasting

Jungle Studio’s Steven Boardman [Credit: Rob Jarvis]

The popularity of over-the-top (OTT) broadcasting is helping to drive the growth of immersive content, which presents both opportunities and challenges for the broadcast audio world.

Mixing in immersive formats allows the audio engineer to create a sense of envelopment and realism like never before, but as channel count and mix complexity increase, neutral, uncoloured studio monitoring with precise imaging becomes even more important.

Here we’ll examine some of the principles of immersive audio, and some of the considerations that audio professionals need to be aware of.

The principles

Immersive audio formats not only surround the listener in the horizontal plane; they also extend into the height dimension. One way to understand the capability of an immersive playback system is to describe how many height layers it offers. Two-channel stereo and conventional surround formats offer only one height layer, located at the height of the listener’s ears, with all loudspeakers at an equal distance from the listener (in terms of acoustic delay) and playing back at the same level.

The different channel layouts for immersive formats serve several purposes. One target is to create envelopment and a realistic sense of being inside an audio field. One height layer alone cannot create this sensation with sufficient realism because a significant part of the listening experience is created by the sound arriving at the listener from above. Therefore the extra height layers of a true immersive system provide this envelopment, and add a significant dimension to the experience.

The second aim for immersive systems used with video is to be able to localise the apparent source of audio at any location across the picture. This is the reason why the 22.2 immersive format (pioneered by NHK in Japan) has three height layers, including a layer below the listener’s ears. Since the UHD TV picture can be very large, extending from floor to ceiling, the audio system has to be able to localise audio across the whole area of the picture.

Growth of immersive

With the demand for immersive content gaining momentum, several systems are competing for dominance in the world of 3D immersive audio recordings. The front-runners are now the cinema audio formats, which are trying to increase their presence in the audio-only area and to enter the television broadcast market too.

While the cinema industry is always searching for the next ‘wow-effect’ to lure the audience from the comfort of their homes into theatres, the growth of immersive audio has been slightly slower in the world of television. However, the pace is picking up now: several companies are studying 3D immersive sound as a companion to UHD television formats, and the International Telecommunication Union (ITU) has issued recommendations about the sound formats to accompany UHDTV pictures. In preparation for the delayed Tokyo Olympic Games, NHK has already started to deliver 8K programming with 22.2 audio.

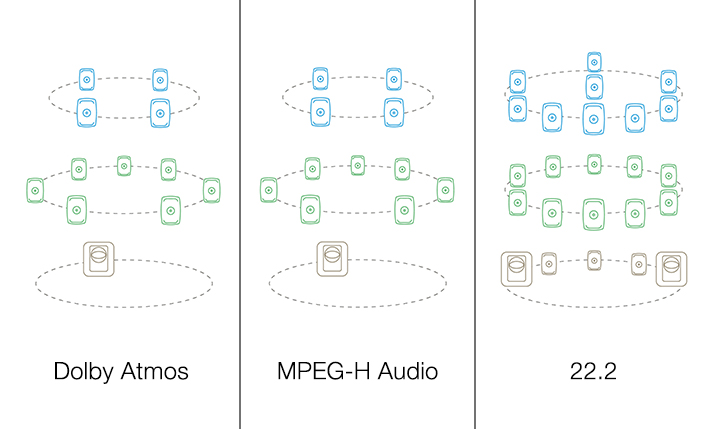

Figure 1

How many layers?

As touched on earlier, modern immersive formats offer two or more height layers: current cinema formats offer two, while the emerging broadcast formats have three or more. Figure 1 shows the configurations of three popular immersive broadcast formats.

One of the height layers is always at the height of the listener’s ears, and this typically creates a layout with backwards compatibility to both surround formats and basic stereo. Typically, other layers are above the listener and, as previously mentioned, layers can also be located below the listener, to enhance the sense of envelopment.

Certain encoding methods for broadcast applications can compress 3D immersive audio into a very compact data package for storage or transmission to the customer. These formats offer an interesting advantage over many other immersive audio formats: the channel count and the orientation of the presentation channels can be selected according to the playback venue or room. Essentially any number of height layers and any density of loudspeaker locations can be used, and this density does not need to be constant.

Creating the feeds for loudspeakers dynamically from the transport format is called rendering. The compact audio transport package is decoded and the feeds to all the loudspeakers are calculated in real time while the immersive audio is played back in the user’s location. This compact delivery format plus the freedom to adjust and optimise the number and location of the playback loudspeakers makes these flexible formats very exciting.
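
As a rough illustration of what a renderer does, the sketch below calculates loudspeaker gains for a single sound source from its direction and from whatever loudspeaker layout happens to be installed. It is a deliberately simplified, horizontal-only example using constant-power panning between the two nearest loudspeakers; it is not the method used by any particular format, and real renderers also handle height layers. The function name `render_gains` and the convention that positive azimuth is to the listener’s left are assumptions made for this example.

```python
import math

def render_gains(source_az_deg, speaker_az_deg):
    """Return one gain per loudspeaker for a source at the given azimuth.

    Simplified sketch: horizontal ring only, distinct loudspeaker azimuths,
    constant-power pan between the two loudspeakers either side of the source.
    """
    n = len(speaker_az_deg)
    if n < 2:
        raise ValueError("need at least two loudspeakers")
    # Visit loudspeakers in order of increasing azimuth around the circle.
    order = sorted(range(n), key=lambda i: speaker_az_deg[i] % 360.0)
    src = source_az_deg % 360.0
    gains = [0.0] * n
    for k in range(n):
        a = order[k]
        b = order[(k + 1) % n]
        start = speaker_az_deg[a] % 360.0
        span = (speaker_az_deg[b] - speaker_az_deg[a]) % 360.0
        offset = (src - start) % 360.0
        if offset <= span:
            # Source lies between loudspeakers a and b: share it between them
            # so that the squared gains always sum to one.
            frac = offset / span if span else 0.0
            gains[a] = math.cos(frac * math.pi / 2)
            gains[b] = math.sin(frac * math.pi / 2)
            break
    return gains

# The same source direction rendered to two different (hypothetical) layouts.
five_channel = [30.0, -30.0, 0.0, 110.0, -110.0]   # L, R, C, Ls, Rs
stereo = [30.0, -30.0]                             # L, R
gains_5 = render_gains(0.0, five_channel)          # all energy in C
gains_2 = render_gains(0.0, stereo)                # equal share in L and R
```

The point of the example is that the source position is fixed in the content, while the gains are only decided once the playback layout is known.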

Common assumptions

Popular immersive audio playback systems typically share two assumptions about the loudspeaker layout and one assumption about the loudspeaker characteristics. Concerning layout, it is assumed that the same level of sound will be delivered to the listening location from all loudspeakers, and the time taken for the audio to travel from each loudspeaker to the listener will also be the same. If each loudspeaker in the system has similar internal audio delay, then this can be achieved by positioning each loudspeaker at an equal distance from the listening position. Otherwise, electronic adjustments of the level and delay are required to align the system.
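
The electronic adjustment described above comes down to simple arithmetic. The following is a minimal sketch, assuming a speed of sound of 343 m/s, loudspeakers with identical internal latency, and measured distances and levels at the listening position; the function name `alignment_trims` and the example figures are hypothetical. Nearer loudspeakers are delayed to match the farthest one, and louder loudspeakers are attenuated to match the quietest one.

```python
SPEED_OF_SOUND_M_S = 343.0  # assumption: dry air at roughly 20 degrees C

def alignment_trims(distances_m, levels_db):
    """Return {name: (delay_ms, gain_db)} to align arrival time and level.

    distances_m: loudspeaker-to-listener distance in metres, per loudspeaker.
    levels_db:   level measured at the listening position, per loudspeaker.
    Internal loudspeaker latency is assumed equal and is therefore ignored.
    """
    farthest = max(distances_m.values())
    quietest = min(levels_db.values())
    trims = {}
    for name, distance in distances_m.items():
        # Extra delay so this loudspeaker's sound arrives with the farthest one.
        delay_ms = (farthest - distance) / SPEED_OF_SOUND_M_S * 1000.0
        # Negative (or zero) gain that brings this loudspeaker down to the quietest.
        gain_db = quietest - levels_db[name]
        trims[name] = (delay_ms, gain_db)
    return trims

# Hypothetical measurements: a height loudspeaker sits closer than the ear-level pair.
trims = alignment_trims(
    {"L": 2.0, "R": 2.0, "Ltf": 1.657},
    {"L": 85.0, "R": 84.0, "Ltf": 86.5},
)
```

With these example figures, the height loudspeaker is 0.343 m closer than the others, so it needs about 1 ms of added delay, along with 2.5 dB of attenuation.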

Concerning loudspeaker characteristics, a fundamental assumption is the similarity of the frequency response for all the loudspeakers in the playback system. Sometimes this is taken to mean that all the loudspeakers in the system should be of the same make and model. In reality, loudspeaker sound is affected by the acoustics of the room in many ways. This can significantly change the character of the audio signal, so that even when the same make and model of loudspeaker is used throughout the system, the individual locations of the loudspeakers will change the audio in a way that renders each loudspeaker performance slightly different.

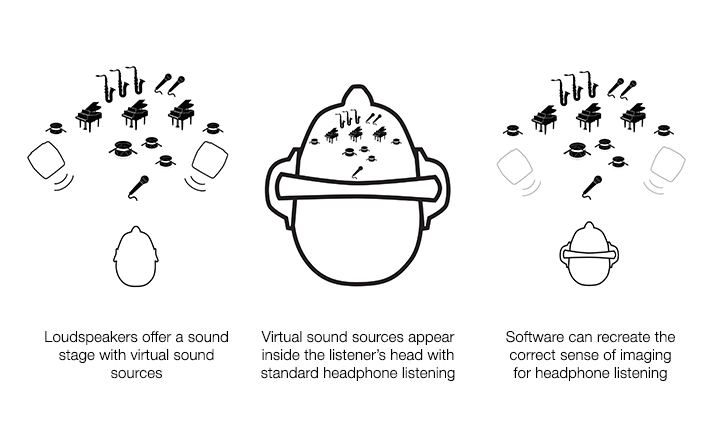

Figure 2

Getting aligned

To turn these assumptions into reality, it is essential that any immersive loudspeaker monitoring system is accurately calibrated for the acoustic space in which it is located. Happily, there are now software tools that help achieve this in a fraction of the time that traditional manual calibration methods would take. Some types of loudspeaker manager software, for instance, allow the alignment of levels and time of flight at the listening location, subwoofer integration and compensation for the acoustical effects of loudspeaker placement. This ensures that all the loudspeakers in the system deliver a consistent and neutral sound character.

For the audio engineer, this will improve both the quality of the production and the speed of the working process, allowing them to produce reliable mixes that will translate consistently to any playback medium.

There may be situations where the use of headphone monitoring is required, particularly for mobile audio professionals working remotely in ad-hoc environments. Headphones, however, break the link to the natural mechanisms that we have acquired over our lifetime for localising sound. This causes sound to appear ‘inside’ our head when presented over headphones, rather than appearing all around us. See Figure 2.

Here, software technology has emerged recently that can play a key role by modelling the acoustics of the user’s head and upper torso, based on data extracted from a simple 360° smartphone video. This then allows the creation of a personalised SOFA file that the user can integrate into the audio workstation’s signal processing for the headphone output. This makes the immersive headphone listening experience much more truthful and reliable, with a far more natural sense of space and direction.
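
To give a feel for the signal processing involved, the sketch below places a mono signal at one direction by convolving it with a pair of head-related impulse responses, one per ear, which is the kind of data a SOFA file typically stores. This is only an illustration: it does not read a SOFA file, the impulse responses are made-up toy values rather than measurements, and the function names `convolve` and `binauralise` are hypothetical.

```python
def convolve(signal, impulse_response):
    """Direct-form linear convolution of two sample lists."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out

def binauralise(mono, hrir_left, hrir_right):
    """Return (left, right) headphone feeds for one source direction."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)

# Toy impulse responses for a source on the listener's left (NOT measured data):
# the left ear hears it immediately, the right ear later and quieter.
hrir_left = [1.0, 0.0, 0.0]
hrir_right = [0.0, 0.0, 0.5]

left, right = binauralise([1.0, 0.5], hrir_left, hrir_right)
# left  == [1.0, 0.5, 0.0, 0.0]
# right == [0.0, 0.0, 0.5, 0.25]
```

Even in this toy case, the right-ear feed is a delayed and attenuated copy of the left, which is the basic interaural cue the ear uses to place a sound to one side. Measured, personalised responses add the finer spectral detail that moves the image outside the head.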